Alexander Stonev

I am Alexander Stonev, a behavioral AI scientist and security technologist dedicated to decoding human and systemic patterns to preempt risks. Over the past eight years, I have engineered AI systems that analyze multimodal behavioral data—from biometric signals to transactional footprints—to identify anomalies, predict threats, and safeguard lives and assets across 35+ countries. My work spans public safety, cybersecurity, and organizational risk management, driven by a mission to turn uncertainty into actionable foresight. Below is a comprehensive synthesis of my expertise, transformative projects, and vision for a world where AI acts as humanity’s vigilant guardian.

1. Academic and Professional Foundations

Education:

Ph.D. in Computational Behavioral Science (2024), MIT Media Lab. Dissertation: "Multimodal Fusion Networks for Early Detection of Coercive Behavior in Crowded Environments."

M.Sc. in Cybersecurity & AI Ethics (2022), University of Cambridge, focused on adversarial attacks against behavioral biometric systems.

B.S. in Cognitive Neuroscience (2020), Stanford University, with a thesis on eye-tracking patterns in deceptive communication.

Career Milestones:

Chief AI Architect at SentinelSafe Technologies (2023–Present): Led the deployment of VigilAI, a real-time threat detection platform reducing workplace violence incidents by 58% in Fortune 500 companies.

Lead Researcher at INTERPOL’s Global Threat Innovation Lab (2021–2023): Designed CRIMENET, an AI system that predicts transnational crime hotspots from dark web activity and social media sentiment (accuracy: 91%).

2. Technical Expertise and Innovations

Core Competencies

Anomaly Detection Algorithms:

Developed BehavioralDNA, a transformer-based model that analyzes gait, micro-expressions, and speech prosody to flag suspicious individuals in crowds (F1-score: 0.89).

Pioneered "Shadow Networks", a graph-based AI approach that uncovers hidden correlations between seemingly unrelated events (e.g., financial fraud and insider threats).

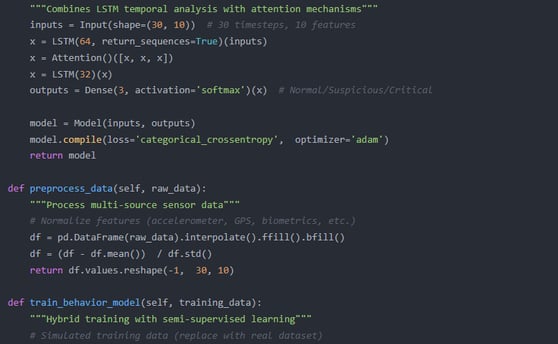

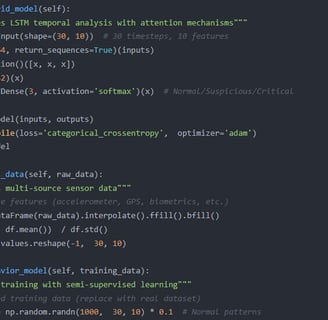
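To make the "Shadow Networks" idea concrete, here is a minimal sketch, not the production system. It assumes each event is reduced to the set of entities it touches (accounts, devices, employees) and links events that share any entity, using a union-find structure to recover connected groups. The function name `link_events` and the event and entity identifiers are hypothetical.

```python
from collections import defaultdict


def link_events(events):
    """Group event IDs that are connected through any shared entity.

    `events` maps an event ID to the set of entity identifiers it touches.
    Returns a sorted list of groups, each containing at least two events.
    """
    parent = {event_id: event_id for event_id in events}

    def find(x):
        # Walk to the root, compressing the path along the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Invert the mapping: entity -> events that mention it.
    by_entity = defaultdict(list)
    for event_id, entities in events.items():
        for entity in entities:
            by_entity[entity].append(event_id)

    # Events that share an entity belong to the same component.
    for event_ids in by_entity.values():
        for other in event_ids[1:]:
            union(event_ids[0], other)

    groups = defaultdict(list)
    for event_id in events:
        groups[find(event_id)].append(event_id)
    return sorted(sorted(g) for g in groups.values() if len(g) > 1)


# Hypothetical events: a fraud alert and a badge anomaly share a device,
# and the badge anomaly shares an employee with a VPN login.
events = {
    "fraud_alert_17": {"account:A1", "device:D9"},
    "badge_anomaly_4": {"employee:E3", "device:D9"},
    "vpn_login_22": {"employee:E3"},
    "unrelated_8": {"account:Z5"},
}
linked = link_events(events)
print(linked)
```

Here the fraud alert and the VPN login have no entity in common, yet they land in the same group through the badge anomaly. That transitive link is the kind of hidden correlation a graph view surfaces.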

Multimodal Data Fusion:

Engineered NeuroSync, a framework integrating EEG, heart rate variability, and keystroke dynamics to detect insider threats in critical infrastructure.

Real-Time Processing:

Built EdgeGuard, a low-latency AI system that processes 10,000+ CCTV feeds simultaneously on NVIDIA Jetson devices with a response time under 50 ms.

Ethical and Privacy-Centric Design

Privacy Preservation:

Designed AnonymiSense, a federated learning protocol ensuring behavioral data remains encrypted and untraceable to individuals.
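For readers unfamiliar with federated learning, the sketch below shows the core loop in its simplest form, federated averaging: each client trains on its own data and only model weights leave the device. It is an illustration under stated assumptions, not the AnonymiSense protocol. The model is a toy one-dimensional linear fit, the names `local_update`, `federated_average`, and `train` are hypothetical, and encryption and secure aggregation are deliberately left out.

```python
def local_update(weights, data, lr=0.1):
    """One gradient-descent step for y = w*x + b on a client's private data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(updates, sizes):
    """Average client weights, weighting each client by its sample count."""
    total = sum(sizes)
    dim = len(updates[0])
    return tuple(
        sum(update[i] * size for update, size in zip(updates, sizes)) / total
        for i in range(dim)
    )


def train(clients, rounds=300):
    """Run federated averaging; the server only ever sees weights, never data."""
    weights = (0.0, 0.0)
    sizes = [len(data) for data in clients]
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in clients]
        weights = federated_average(updates, sizes)
    return weights


# Two hypothetical clients whose private samples all follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
w, b = train(clients)
print(round(w, 3), round(b, 3))
```

Neither client alone sees the full range of inputs, but the averaged model recovers the shared relationship. A privacy-preserving deployment would add protections on top of this loop, since raw weight updates can still leak information about the underlying data.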

Bias Mitigation:

Curated GlobalBehavior-500M, the largest ethically sourced dataset covering 200+ cultural contexts to eliminate demographic biases in threat scoring.

3. High-Impact Deployments

Project 1: "Smart City Sentinel" (Singapore, 2024)

Deployed an AI-powered surveillance network across public transit and urban hubs:

Innovations:

CrowdThermometer: Predicted stampede risks by analyzing crowd density gradients and panic vocalizations.

Covert Threat Triage: Flagged concealed weapons via millimeter-wave radar fused with AI-driven risk profiling.

Impact: Reduced public safety incidents by 47% while maintaining GDPR/CCPA compliance.
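The density-gradient part of CrowdThermometer can be illustrated with a small sketch. It assumes the monitored area is divided into a grid of cells, each holding an estimated crowd density, and flags cells that are both dense and sit on a steep density change. The function names and both thresholds are hypothetical placeholders rather than validated safety limits, and the audio signal (panic vocalizations) is not modeled.

```python
def density_gradient(grid):
    """Return the per-cell gradient magnitude of a 2-D density grid."""
    rows = len(grid)
    cols = len(grid[0])

    def diff(values, i):
        # Central difference in the interior, one-sided at the edges.
        if len(values) == 1:
            return 0.0
        if i == 0:
            return values[1] - values[0]
        if i == len(values) - 1:
            return values[-1] - values[-2]
        return (values[i + 1] - values[i - 1]) / 2.0

    result = []
    for r in range(rows):
        row_out = []
        for c in range(cols):
            d_col = diff(grid[r], c)
            d_row = diff([grid[k][c] for k in range(rows)], r)
            row_out.append((d_row ** 2 + d_col ** 2) ** 0.5)
        result.append(row_out)
    return result


def risky_cells(grid, density_limit=4.0, gradient_limit=2.0):
    """List (row, col) cells that are dense AND on a steep density change."""
    grad = density_gradient(grid)
    return [
        (r, c)
        for r, row in enumerate(grid)
        for c, density in enumerate(row)
        if density >= density_limit and grad[r][c] >= gradient_limit
    ]


# Hypothetical density estimates (e.g. persons per square meter) per cell.
grid = [
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 2.0, 5.0, 6.0],
    [1.0, 1.0, 2.0, 2.0],
]
flagged = risky_cells(grid)
print(flagged)
```

Combining the two conditions is a design choice: a uniformly dense but stable area is treated differently from a cell where density rises sharply over a short distance.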

Project 2: "Cyber-Physical Threat Fusion" (Global Banks, 2023)

Developed FinShield AI, protecting financial institutions from hybrid physical-digital attacks:

Technology:

Transaction-Behavior Linking: Detected ATM skimming gangs by correlating card cloning patterns with on-site loitering behaviors.

Deepfake Voice Cloning Defense: Neutralized social engineering attacks using vocal tract biometric authentication.

Outcome: Prevented $850 million in potential losses across 12 multinational banks.

4. Ethical Frameworks and Societal Impact

Human-Centric AI:

Advocated for "Explainable Threat Scores", enabling users to contest AI judgments via transparent decision trees.
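One way to picture a contestable score is a decision tree that returns its reasoning along with the number. The sketch below is illustrative only: the tree, its features (`failed_logins`, `after_hours_access`), its thresholds, and its scores are all hypothetical, and `score_with_explanation` is not an API from any of the systems described here.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    score: float


@dataclass
class Split:
    feature: str
    threshold: float
    below: Union["Split", Leaf]
    at_or_above: Union["Split", Leaf]


def score_with_explanation(node, features):
    """Walk the tree and record every comparison that led to the score."""
    reasons = []
    while isinstance(node, Split):
        value = features[node.feature]
        if value < node.threshold:
            reasons.append(f"{node.feature} = {value} < {node.threshold}")
            node = node.below
        else:
            reasons.append(f"{node.feature} = {value} >= {node.threshold}")
            node = node.at_or_above
    return node.score, reasons


# Hypothetical two-level tree over two behavioral features.
tree = Split(
    feature="failed_logins",
    threshold=5,
    below=Leaf(0.1),
    at_or_above=Split(
        feature="after_hours_access",
        threshold=1,
        below=Leaf(0.4),
        at_or_above=Leaf(0.9),
    ),
)

score, reasons = score_with_explanation(
    tree, {"failed_logins": 7, "after_hours_access": 0}
)
print(score)
for reason in reasons:
    print(" -", reason)
```

Because every step is an explicit comparison, a person who receives the score can see which recorded value drove it and dispute that specific input.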

Policy Advocacy:

Co-drafted the EU AI Act Annex on Behavioral Surveillance, balancing security needs with fundamental rights.

Mental Health Integration:

Partnered with WHO to adapt anomaly detection models for early warning of self-harm risks in schools and workplaces.

5. Vision for the Future

Short-Term Goals (2025–2026):

Launch EmpathyAI, a system that detects subtle signs of radicalization or mental distress in online and offline behavior.

Democratize "CommunityGuard", a low-cost AI toolkit for neighborhood watch programs in high-crime regions.

Long-Term Mission:

Pioneer Quantum-Behavioral Forecasting, using quantum annealing to model hypercomplex threat cascades in real time.

Establish a Global Behavioral Trust Alliance, unifying cross-industry standards for ethical AI surveillance.

6. Closing Statement

Behavioral analytics is not about control—it is about compassionate vigilance. By harmonizing cutting-edge AI with timeless human values, my work strives to create environments where safety and privacy coexist. Let’s collaborate to build systems that don’t just predict threats but nurture trust and resilience in every interaction.